The past few months I’ve been working to move more of my work into JavaScript, and in particular the node.js ecosystem. I hope that by doing this I will be able to create better demos and applications that are easier to use as well as develop.

Numerical Computing in a Hostile Environment

But despite the numerous practical advantages to working in JavaScript (especially from a user experience perspective) there are still many things that JS just sucks at. For me the most painful of these is the weak support for numerical computing and binary data types. These problems are due to fundamental limitations of the language, and not something that can be papered over in a library. For example, in JS there is:

- No pass-by-value for non-primitive data types

- No way to control memory alignment for members of objects

- No way to restrict references to pointers/prevent aliasing

- No way to ensure arrays of objects are allocated in place

- No operator overloading

- No array operations

- No SIMD instructions and data types

- … etc.

This situation is bad, but the benefits of using JS from an accessibility perspective are just too big to ignore, and so it is at least worth it to try as much as can be done within the limitations of the system. Moreover, these obstacles are by no means unprecedented in the world of computing. As an instructive example, consider the Java programming language: Java suffers from exactly the same design flaws listed above, and yet it is a viable platform for numerical computing with an enthusiastic community (though it is still nowhere near as fast as more efficiently designed languages like Fortran/C99). So, it is a plausible hypothesis that JavaScript could achieve similar results.

Now, reimplementing say LAPACK or SciPy is a huge project, and it is not reasonable to try doing it in a single article or even within a single library. To help focus our inquiries, let us first restrict the scope of our search. Instead, let us study today on the fundamental problem of implementing multidimensional arrays.

Multidimensional arrays

Multidimensional arrays are a natural first step towards building systems for solving numerical problems, and are a generally useful data type in their own right, with applications in graphics and vision. In a language like JavaScript, there are multiple ways

Arrays-of-Arrays

Perhaps the most popular way to represent higher dimensional arrays in JavaScript is as arrays-of-arrays. For example,

var A = [[1, 0, 0],

[0, 1, 0],

[0, 0, 1]]

This is the approach that numeric.js uses, and it has some merits. For example, the syntax for accessing elements in an array-of-arrays is quite pleasingly simple:

var x = A[i][j]

And it does not require introducing any extra data structures or conventions into the language.

However, while arrays-of-arrays are by far the simplest solution, from a performance perspective they are disastrous. The most obvious problem is that to allocate a d-dimensional array-of-arrays with O(n) elements per each element, you need to store extra bytes worth of memory in pointers and intermediate arrays. Additionally, accessing an element in an array-of-arrays requires

dependent memory dereferences. These operations can’t be easily pipelined and are terrible from a cache performance perspective. If performance is even remotely close to being an objective, then we need to try for something better.

Typed Arrays

Fortunately, in both JavaScript and Java, there is a better alternative to arrays-of-arrays: namely typed arrays. Using a typed array lets us guarantee that the data for our array is arranged contiguously with no wasted space. For example, here is how we would create a typed array for the same matrix described earlier:

var A = new Float32Array([1, 0, 0, 0, 1, 0, 0, 0, 1])

The downside is that accessing elements from a typed array is a bit more complicated. To do this we need to agree on some convention up front about how the elements in the array are arranged in memory. In languages like C, typically this is done in colexicographic (also known as row major order), while other languages like Fortran use a lexicographic ordering (also called column major order). The choice between the two is totally arbitrary, but once you pick one you have to stick with it. Assuming a row major ordering then, we can access an element from the array using the formula:

var x = A[3 * i + j]

Typed arrays can be very fast, but they are a bit clumsy to work with. You need to keep track of the number of both what convention you are using to store the array as well as what its dimensions are, which is an extra blob of data that needs to be passed around and slows things down substantially.

Strided Arrays

Strided arrays solve these problems by considering array storage in a more general form. Instead of keeping a fixed convention for indexing, they write the index of an element in array as a general linear function of its coordinate. For example,

var x = A[ c0 * i + c1 * j + ... ]

The result is that they achieve the same performance as flattened typed arrays, but gain the advantage that they can be easily sliced, transposed or reversed just by changing the stride. For example, if we wanted to switch the x/y axes of an image, all that we would need to do is swap the strides:

var x = A[ c1 * i + c0 * j + ... ]

Setting a stride to a negative value can be used to reverse an axis, and changing the shape of an array lets us easily crop out subarrays from higher dimensional arrays.

Introducing ndarray

At least theoretically, strided arrays sound like a great idea and so to see how well they worked in practice I created the following library:

https://github.com/mikolalysenko/ndarray

Essentially, an ndarray is an object that has 4 pieces of data:

- data – The underlying typed array

- shape – The shape of the ndarray

- stride – The stride of the ndarray

- offset – A pointer to the first element in the array

To look up an item in an ndarray you use the formula:

data[stride[0] * i[0] + stride[1] * i[1] + ... + offset]

You can download it and try it out yourself using npm, or check out some projects already built on top of ndarray here.

But is it fast?

To see how each of these multidimensional array representations compare, I decided to compare their performance on a simple component-wise operation for various shapes of arrays. In pseudocode, this operation looks something like:

function test(A, B) {

for(i in range) {

A[i] += B[i] + 0.1

B[i] -= A[i] * 0.5

}

}

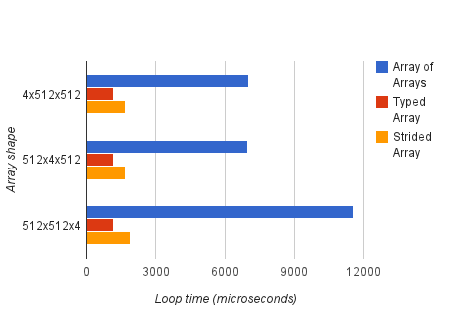

I ran this simple loop using different variations of multidimensional arrays with various shapes. The following chart summarizes the results:

You can find more detailed results and further experiments (including direct comparisons using numeric.js) at the github page for this project:

https://github.com/mikolalysenko/ndarray-experiments

These results are somewhat typical, indexing in an ndarray is a little slower than directly walking over a typed array, but not by much. For arrays of arrays, the performance gets progressively worse as the first axes get larger, but even in the best case they trail far behind data structures built on typed arrays.

Next time

The natural question to ask is can we do even better? And the answer is yes. The key to getting further performance improvements is to batch operations together allowing for full array comprehensions. I’ll discuss this in the next post, but for now you can take a look at my current implementation of bulk operations on ndarrays in the cwise library.

(Do you need performance numbers for column-major accesses vs row-major accesses? That is, differently strided arrays could have pretty different performance characteristics when used in gemm-style matrix multiplication, right?)

Nowadays, I think the best prospect for numerical computing on Javascript comes from asm.js (http://asmjs.org/). It would be a great project to figure out how to write a Javascript array library that maps well to asmjs.org primitive calls in the backend. At the very least, a straightforward port of CBLAS through emscripten should give good performance — around that of Java — and the asm.js guys are even talking about automatic vectorization nowadays!

It looks like your cwise library is already very much in this direction; it’d be very interesting if it compiled its comprehensions to asm.js modules.

Thanks for the comment! I think that targeting asm.js would be pretty interesting, but there are some real problems with interfacing native JS and asm.js, since each asm.js module gets its own personal view of a heap. In order to make ndarrays work in asm.js, they would need a custom allocator that is tied to some shared asm.js heap. This is not unmanageable, but it is complicated. I like the idea of eventually doing this though, but I think that there is going to be a huge amount of work in build all of the necessary tooling. Maybe I will get around to writing up some ideas on that later once (or if) asm.js becomes more stable.

A big issue with multi_array.js is an artifact of how V8 tries to automatically unbox arrays of floating point values.

If in initArray you add

line[0] = 0.1;

line[0] = 0.0;

after initialization loop that creates line then you will see sudden and surprising speedup (at least on a recent enough V8) 🙂

the issue here is that V8 creates line as an array of integers (0 is an integer after all) and for some reason is unable to hoist transition (integer elements -> double elements) that happens in the benchmarking loop out of the loop confusing all other optimizations.

Also in this particular benchmark: A[i][j][k]/B[i][j][k] is not three dependent loads at all because A[i][j]/B[i][j] are invariants of the innermost loop.

Thanks! That’s interesting to know, though I tried making the modification to initArray you suggested and it ended up slowing down the benchmark a lot. It is interesting though that it had such a huge effect on performance. If you think you can speed up the experiments, send a pull request and I’ll add it in!

I was checking effect on the TOT V8, it might be that older V8’s behave differently (though I am not sure why). Which node.js version are you using?

I checked V8 3.14 which is what node.js 0.10 is supposed to be using.

Back in the day slice did not preserve the type of the backing store so you need to do:

ri[j][0] = 0.1;

ri[j][0] = 0.0;

after ri[j] = line.slice(0), instead of applying this trick to line itself (though it is less efficient obviously).

[I am sorry for not sending a pull request but it’s a bit too involved for 2 lines :-)]

Yeah, it doesn’t make a difference if I do it before or after the slice. It seems that using that initialization trick slows down the run time loop quite a bit. For example, here are the numbers I got running the test on my machine:

Running experiment with dimensions = 16 x 16 x 16

Proposal #1 array of native arrays Total Init = 2ms Execution = 28ms

Proposal #2 array of native arrays (fast init) Total Init = 2ms Execution = 45ms

Running experiment with dimensions = 64 x 64 x 64

Proposal #1 array of native arrays Total Init = 4ms Execution = 1606ms

Proposal #2 array of native arrays (fast init) Total Init = 39ms Execution = 7449ms

Running experiment with dimensions = 512 x 512 x 4

Proposal #1 array of native arrays Total Init = 123ms Execution = 6757ms

Proposal #2 array of native arrays (fast init) Total Init = 427ms Execution = 31354ms

Running experiment with dimensions = 512 x 4 x 512

Proposal #1 array of native arrays Total Init = 8ms Execution = 6484ms

Proposal #2 array of native arrays (fast init) Total Init = 130ms Execution = 28059ms

Running experiment with dimensions = 4 x 512 x 512

Proposal #1 array of native arrays Total Init = 11ms Execution = 6458ms

Proposal #2 array of native arrays (fast init) Total Init = 120ms Execution = 28353ms

Running experiment with dimensions = 2 x 2 x 2048

Proposal #1 array of native arrays Total Init = 0ms Execution = 49ms

Proposal #2 array of native arrays (fast init) Total Init = 3ms Execution = 115ms

Running experiment with dimensions = 2048 x 2 x 2

Proposal #1 array of native arrays Total Init = 2ms Execution = 55ms

Proposal #2 array of native arrays (fast init) Total Init = 7ms Execution = 110ms

It is interesting that it has such a pronounced effect on the performance, but using the 0.1/0.0 method to initialize the arrays definitely slows things down. If you think it can be made faster, then please offer a suggestion.

(By the way I am running the latest version of node)

I don’t understand what is going on.

Here is what I see:

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

gistfile1.txt

hosted with ❤ by GitHub

(first measurement without a patch, second measurement is with the patch, diff itself is applied to the clean version of your experiments github repo).

It would be very interesting to figure out what is the difference between our setups.

Can it be that you have some local changes unpushed local changes?

Can you obtain a IR trace (hydrogen*.cfg) from your run with –trace-hydrogen (with and without my proposed patch) and post it somewhere?

@mraleph: Double and triple checked it, no unpushed changes. Where would you like me to upload the –trace-hydrogen output? Is email ok?

(Also, I made a separate test case for the initialization stuff so you can try it out yourself.)

Yep, just drop me an email to me@mrale.ph

Please in multi_native_fast_init.js remove this line:

line[i] = 0.1;

then you will see speed up 🙂

I don’t think I need hydrogen trace now.

Awesome! I tried it out and got a pretty massive speed up. Now the multidimensional array version is almost as fast as using typed arrays!

Very nice post! I’ve not seen a whole lot of information on typed and strided arrays, thanks for including some useful information on the subject.

Any suggestions on any other places to read about typed/ strided arrays? The MDN articles don’t seem to explain the concepts very well.

There is really not much to understand about typed arrays beyond the MDN articles, and the strided array concept is basically explained on this article/the wiki link. The main difference between a strided array vs. the way most languages implement multidimensional arrays is that a strided array does not presume the underlying ordering of the items. This gives it a huge amount of extra flexibility at the expense of a (small) increase in run time overhead (ie you have to pass around the shape and the stride).

Ok, thanks! I think I just have to read more into the practical usage of typed arrays.

if you don’t know about it, look at numpy (a mature python array library), you have many things in common.

Valuable information. Fortunate me I found your site unintentionally, and I’m surprised why this twist of fate did not took place in advance!

I bookmarked it.

Hi thanks for sharing thiis